Not every project needs dashboards, tags, and a weekly KPI review. For some teams, analytics is overhead that slows learning, drains attention, and adds cost. If you’re early, tiny, or validating something narrow, it can be perfectly rational to skip analytics—at least for now. Here’s a pragmatic guide to when “no analytics” (or “ultra-light analytics”) is the right call, what risks you’re accepting, and how to know when it’s time to level up.

When skipping analytics actually makes sense

1) You’re searching, not scaling.

Pre-product/market fit efforts are about finding something people truly want. If you’re changing copy daily and the product weekly, precision measurement lags behind the pace of learning. Conversations, waitlists, and revenue-in-hand say more than a perfect funnel.

2) The decision is obvious without numbers.

When a signal is binary—no one clicks, or everyone is confused—qualitative evidence (support threads, recordings, founder-led demos) settles the question faster than instrumentation.

3) The stakes are tiny.

A hobby site, a one-week landing page test, or a one-off event doesn’t justify a tracking stack. Spend that hour writing better copy, emailing prospects, or shipping the feature.

4) You have a single success criterion.

If your only question is “Did we sell 50 units by Friday?” you can read that off your payment system or inbox. You don’t need cohorts, UTM governance, or multi-touch attribution to make the call.

5) Privacy constraints are strict and the audience is small.

For sensitive contexts (health, education, internal tools), collecting less can be a feature. User interviews, opt-in surveys, and server logs may be the right ceiling for a while.

When “no analytics” is a mistake

- Paid acquisition above a trivial budget. If money is on the line, flying blind wastes it.

- Complex funnels with delayed conversion. Content → trial → contract? You need at least a skeleton view or you’ll optimize the wrong step.

- Teams beyond a few people. Once decisions are shared, anecdotes don’t scale. You’ll talk past each other without common facts.

- Recurring optimization problems. If the same debate resurfaces monthly (pricing, onboarding, churn), the debt from not measuring compounds.

A simple decision table

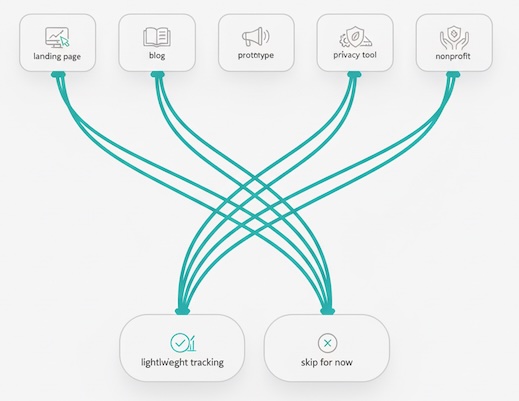

Use this to sanity-check whether analytics is worth it right now. If your situation matches the “Go without analytics if…” column in most respects, you can probably go without, at least temporarily. Put the matching “Revisit trigger” on your calendar so the decision gets re-examined.

| Context | Primary goal | Go without analytics if… | Minimal sanity check | Revisit trigger |
|---|---|---|---|---|
| Pre-launch landing page | Validate demand | You just need 100 signups to proceed | Count signups and reply rate in your email tool | You’ll spend on ads or run A/B tests |
| Indie blog or portfolio | Publish, learn voice | You’re not monetizing or selling | Track posts published and inbound emails | You pitch sponsors or sell products |
| One-week promo | Clear inventory | Success is units sold this week | Orders and refunds from checkout | Plan a similar promo next month |
| Internal prototype | Get stakeholder green light | 5–10 people decide go/no-go | Meeting notes and a short feedback survey | Rollout to >50 users |
| Privacy-sensitive tool | Maintain trust | Users expect minimal data collection | Opt-in survey + support logs | You need to scale onboarding or paid growth |
| Small nonprofit site | Inform and accept donations | Donations are occasional, small volume | Monthly donation count and bank report | Apply for grants or run paid campaigns |

What you still need if you skip analytics

Even in a numbers-light strategy, keep these three guardrails:

- A single, written goal per period. One sentence, one number, one deadline. “Book 5 paid pilots by June 30.” This prevents scope drift.

- A weekly pulse check. A short, human readout: what happened, what we learned, what we’re changing next week.

- A source of truth for outcomes. Payments, CRM, or your email inbox—somewhere undeniable that answers “did we win?”

The risks you’re accepting (and how to cap them)

- Misattribution. You won’t know which channel or message worked.

  Cap it: Write down what you think caused the outcome and why. Treat it as a hypothesis, not a fact.

- Overconfidence in anecdotes. The loudest story wins.

  Cap it: Sample at least 5 conversations before deciding. If possible, get a dissenting view.

- Blind spots. You might miss a slow leak (e.g., churn creep).

  Cap it: Do a monthly “vitals” review: revenue, active users, refunds/complaints.

- Harder handoff later. Retrofitting analytics after the fact is messier than adding it up front.

  Cap it: Keep a running list titled “Metrics we’ll want post-MVP.” Future you will thank you.
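For the monthly vitals review to catch a slow leak rather than just restate numbers, it helps to compare each vital with the previous month and flag large moves in the bad direction. The Python sketch below assumes you copy revenue, active users, and refunds/complaints by hand from your source of truth; the `Vitals` record, the `review` function, and the 10% threshold are hypothetical choices for illustration, not a standard.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Vitals:
    """One month of numbers copied by hand from your source of truth (hypothetical fields)."""
    month: str                  # e.g. "2024-05"
    revenue: float              # from payments or the bank report
    active_users: int           # however you already count "active"
    refunds_or_complaints: int  # refunds plus complaint emails/tickets


# (field name, True if a *rise* is the bad direction)
CHECKS = (
    ("revenue", False),
    ("active_users", False),
    ("refunds_or_complaints", True),
)


def _pct_change(old: float, new: float) -> float | None:
    """Relative change; None when the old value is zero (percentage undefined)."""
    return None if old == 0 else (new - old) / old


def review(prev: Vitals, curr: Vitals, threshold: float = 0.10) -> list[str]:
    """Flag vitals that moved more than `threshold` (a fraction) in the bad direction."""
    flags = []
    for name, bad_if_rising in CHECKS:
        change = _pct_change(getattr(prev, name), getattr(curr, name))
        if change is None:
            continue  # no meaningful percentage from a zero baseline
        if (change > threshold) if bad_if_rising else (change < -threshold):
            flags.append(f"{name}: {change:+.0%} vs {prev.month}")
    return flags


if __name__ == "__main__":
    april = Vitals("2024-04", revenue=4200.0, active_users=310, refunds_or_complaints=4)
    may = Vitals("2024-05", revenue=4100.0, active_users=262, refunds_or_complaints=7)
    for flag in review(april, may):
        print(flag)
```

With the sample months, revenue dips about 2% and is not flagged, while active users (-15%) and refunds/complaints (+75%) are. A coarse threshold is deliberate: at small volumes, month-to-month noise is large, so treat a flag as a prompt to talk to users, not as a verdict.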

If you track just one thing…

Pick the metric that most directly reflects value created for users and the business. A few examples:

- Service business: Paid meetings booked per week.

- Consumer app: 7-day returning users (count, not rate).

- E-commerce experiment: Contribution margin dollars from the promo.

- Newsletter: Replies to your call-to-action (not just opens).

- Community: Net new active contributors this month.

If it doesn’t change your next decision, it’s not the one thing.
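If your only data is a server log, the consumer-app metric can still be counted with a few lines of code. The sketch below assumes you can export `(user_id, visit_date)` pairs and uses one possible definition of a 7-day returning user: someone who visited in the 7 days ending on a given date and also visited at some point before that window. Other definitions (for example, returning within 7 days of the first visit) are also in use, so pick one and keep it fixed. The function name `returning_users_7d` is hypothetical.

```python
from __future__ import annotations

from datetime import date, timedelta
from typing import Iterable


def returning_users_7d(visits: Iterable[tuple[str, date]], as_of: date) -> int:
    """Count returning users in the 7 days ending on `as_of` (inclusive).

    Assumed definition: a user counts if they visited at least once in
    [as_of - 6 days, as_of] AND at least once before that window.
    Returns a count, not a rate.
    """
    window_start = as_of - timedelta(days=6)
    in_window: set[str] = set()
    before_window: set[str] = set()
    for user_id, day in visits:
        if window_start <= day <= as_of:
            in_window.add(user_id)
        elif day < window_start:
            before_window.add(user_id)
        # Visits after `as_of` are ignored.
    return len(in_window & before_window)


if __name__ == "__main__":
    log = [
        ("ana", date(2024, 5, 1)),
        ("ana", date(2024, 5, 20)),
        ("ben", date(2024, 5, 19)),  # first visit this week: new, not returning
        ("cy", date(2024, 5, 2)),    # visited earlier, no visit this week
        ("dee", date(2024, 5, 10)),
        ("dee", date(2024, 5, 15)),
    ]
    print(returning_users_7d(log, as_of=date(2024, 5, 20)))
```

Running it prints 2: ana and dee qualify, ben is new this week, and cy has not come back.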

Qual beats quant when you’re early

Numbers tell you what is happening; only people tell you why. When you’re small:

- Do five user calls for every “report” you might build.

- Save quotes that capture jobs, fears, and language; they’ll guide copy and product far better than a heatmap.

- Watch two sessions end-to-end (live or recorded) each week. Friction screams when you see it.

Signs it’s time to add lightweight analytics

Consider graduating from “none” to “some” when:

- Money moves to media. You’re putting real budget into ads or sponsorships and need to stop guessing.

- You repeat a playbook. If you’ll run similar campaigns again, measuring deltas has compounding value.

- Team grows. New stakeholders need shared facts to align quickly.

- Decisions stall. If debates loop, instrumentation can break ties.

“Lightweight” doesn’t mean a martech buffet. It means the smallest set of metrics that consistently settle arguments.

Common myths to ignore

- “Serious teams always measure everything.” Serious teams measure what changes their next action. Everything else is vanity.

- “If we don’t track now, we’ll never catch up.” You can add analytics later. Capture outcomes and key dates in the meantime to reconstruct baselines.

- “Executives only trust dashboards.” Executives trust clarity. A crisp narrative with undeniable outcomes beats a dense chart any day.
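Capturing outcomes and key dates can be as plain as an append-only CSV file. The Python sketch below is one hypothetical way to do it: `record` appends a dated outcome along with its source of truth, and `monthly_baseline` later sums one event type per calendar month, giving you a baseline to compare against once you do add analytics. The file layout, column names, and function names are assumptions for illustration, not a convention from any tool.

```python
from __future__ import annotations

import csv
from collections import defaultdict
from datetime import date
from pathlib import Path

FIELDS = ["date", "event", "value", "source"]  # hypothetical column names


def record(ledger: Path, day: date, event: str, value: float, source: str) -> None:
    """Append one dated outcome (a sale, a signup, a launch) to a CSV ledger."""
    is_new = not ledger.exists()
    with ledger.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()  # write the header only when creating the file
        writer.writerow(
            {"date": day.isoformat(), "event": event, "value": value, "source": source}
        )


def monthly_baseline(ledger: Path, event: str) -> dict[str, float]:
    """Sum the values of one event type per calendar month, keyed "YYYY-MM"."""
    totals: dict[str, float] = defaultdict(float)
    with ledger.open(newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f):
            if row["event"] == event:
                totals[row["date"][:7]] += float(row["value"])  # "2024-05-03" -> "2024-05"
    return dict(sorted(totals.items()))
```

For example, calling `record(Path("outcomes.csv"), date(2024, 5, 3), "paid_pilot", 1, "CRM")` after each win, and `monthly_baseline(Path("outcomes.csv"), "paid_pilot")` months later, reconstructs pilots per month without any tracking stack.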

A one-page review template (keep it simple)

Use this after any small project or campaign—no dashboards required.

Objective (one line):

What outcome did we want, by when?

Outcome (facts only):

What happened? Numbers from the source of truth.

Evidence we relied on:

Conversations, emails, purchase logs, support tickets.

What we learned:

Two insights we’d bet on again.

What we’ll change next time:

One behavior, one message, or one audience tweak.

Revisit date:

When we’ll decide whether to add analytics.

Bottom line

Analytics is a tool, not a virtue. If measurement won’t change your next move—or your project is too small, fast, or sensitive—skipping it can be the smartest choice. Write down a single goal, review outcomes weekly, keep a truthful ledger, and listen to users. When the cost of not knowing starts to exceed the cost of knowing, that’s your cue to turn the dials on.
